a9s Backup Process

This document describes the supported properties for the a9s Backup Service.

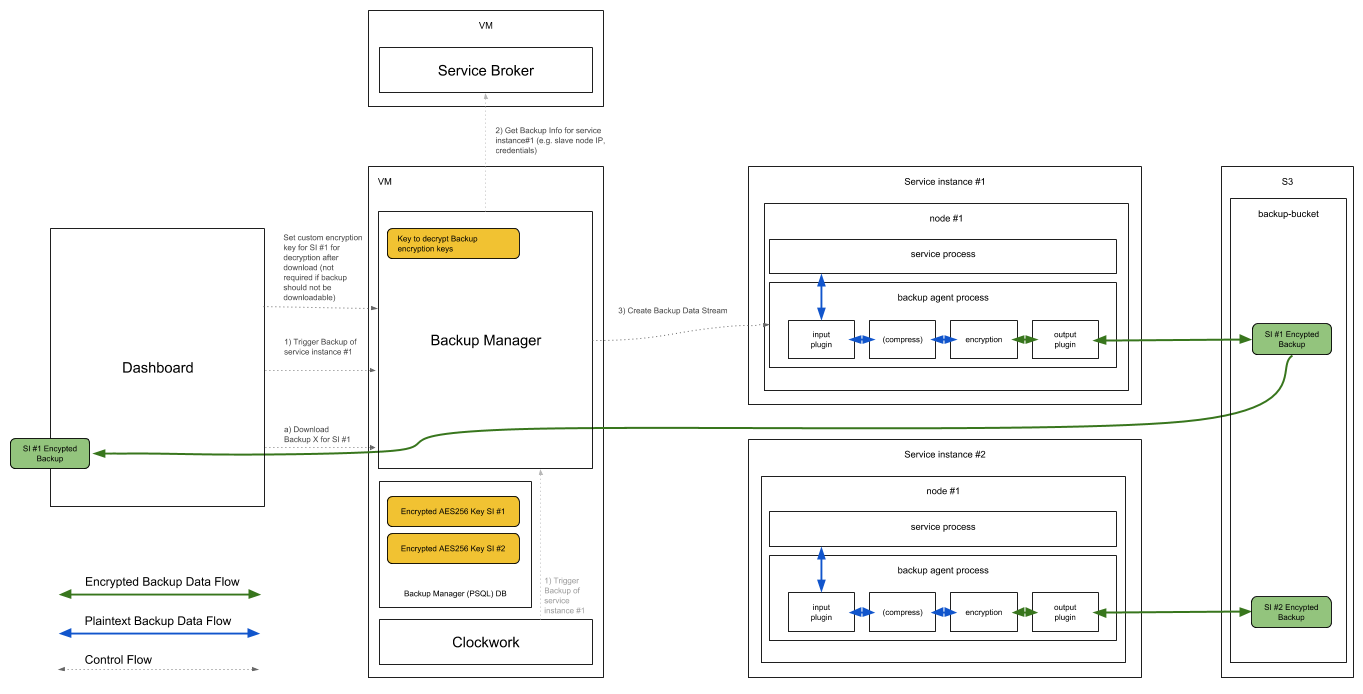

Creating a new backup of an active service instance in the a9s Service Dashboard triggers the /backup_agent/backup endpoint of the a9s Backup Manager.

Afterwards, the Backup Manager starts the backup process of the selected service instance by inserting the backup job into the backup agent queue. The backup agent, co-located with the deployment of the service instance itself, starts creating the backup by connecting to the virtual machine(s) of the service instance.

The backup is then compressed and encrypted with the encryption key that the user defined in the a9s Service Dashboard. Finally, the backup is uploaded to the configured backup store (e.g. AWS S3).

While the a9s Backup Framework supports AWS S3 as a backup store, this does not extend to every AWS S3 Storage Class. Currently, the supported AWS S3 storage class is S3 Standard.

Restore a Backup

Restoring a service instance in the a9s Service Dashboard triggers the /backup_agent/restores endpoint of the a9s Backup Manager.

The backup manager handles the incoming request and triggers the restore of the service instance by inserting the restore job into the backup agent queue.

Once the restore job has been started, the backup agent downloads the selected backup from the configured backup store and prepares the backup by decrypting and unpacking the downloaded archive file.

Finally, the backup agent imports the backup data into the running service instance by connecting to the virtual machine(s) of the service instance.

Configure a Backup's maximum file size

Backups are created using the split-file method, which makes large backups easier to handle. Depending on the backup's size, this creates multiple files, each up to a maximum size configured with the file_size_limit property (in bytes). The maximum size of the chunks to be uploaded is configured with the chunk_size property, also in bytes.

The properties file_size_limit and chunk_size can only be changed using the template-uploader-errand. The best way is to add the following content to the template-custom-ops section of the template-uploader-errand job.

For example, for the a9s PostgreSQL templates it looks like this:

    template-uploader:
      ...
      template-custom-ops: |
        - type: replace
          path: /instance_groups/name=pg/jobs/name=backup-agent/properties/backup-agent/plugins/output?/fog?
          value:
            local:
              file_size_limit: 104857600 # 100 MB in Bytes
              chunk_size: 8388608 # 8 MB in Bytes
      ...

From then on, the Backup Agent of each newly created service instance has the file_size_limit and chunk_size properties set.

These properties are configured in the backup agent job of the service instance manifest, as shown below.

    properties:
      backup-agent:
        ...
        plugins:
          ...
          output:
            ...
            fog:
              local:
                file_size_limit: 104857600 # 100 MB in Bytes
                chunk_size: 8388608 # 8 MB in Bytes
                ...

If the values are not set, the properties default to 1 GB for file_size_limit and 5 MB for chunk_size.
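
To get a feel for how the two limits interact, the arithmetic can be sketched in a few lines of Python. This is only an illustration of the sizes described above, not the Backup Agent's actual splitting code: the function name plan_backup_split is hypothetical, and reading "1 GB" and "5 MB" as binary units (like the 104857600 and 8388608 values in the examples) is an assumption.

```python
# Defaults stated above, interpreted as binary units (assumption).
DEFAULT_FILE_SIZE_LIMIT = 1024 ** 3      # 1 GB
DEFAULT_CHUNK_SIZE = 5 * 1024 ** 2       # 5 MB


def ceil_div(a, b):
    """Integer division rounded up."""
    return (a + b - 1) // b


def plan_backup_split(backup_size,
                      file_size_limit=DEFAULT_FILE_SIZE_LIMIT,
                      chunk_size=DEFAULT_CHUNK_SIZE):
    """Return (number_of_files, chunks_per_full_file) for a backup of backup_size bytes.

    Hypothetical helper: it only reproduces the size arithmetic, assuming every
    file except the last is filled up to file_size_limit.
    """
    if backup_size < 0 or file_size_limit <= 0 or chunk_size <= 0:
        raise ValueError("sizes must be positive")
    number_of_files = ceil_div(backup_size, file_size_limit)
    chunks_per_full_file = ceil_div(file_size_limit, chunk_size)
    return number_of_files, chunks_per_full_file


# A 250 MB backup with the example values from the manifest above.
files, chunks = plan_backup_split(250 * 1024 ** 2, 104857600, 8388608)
print(files, chunks)
```

With the example values, a full 100 MB file does not divide evenly into 8 MB chunks, so the last chunk of each file would be smaller than chunk_size under these assumptions.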

Custom Properties

Regular Backup Cycle

The Backup Manager creates backups in a regular cycle to ensure your data is saved. To configure when a full backup is created, set the full_backup_repeat property. The configuration works similarly to a cron job but with limited functionality: only stars, numbers, and intervals are allowed.

The default value is:

"30 1 * * *"

Description: '(Minute) (Hour) (Day of the month) (Month) (Day of the week)'

Example:

    properties:
      anynines-backup-manager:
        full_backup_repeat: "30 */4 * * *"
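
Before deploying a new schedule, it can help to check the expression against the restricted syntax described above. The following Python sketch is not part of the a9s Backup Manager. The function name is hypothetical, and it assumes that "intervals" means the `*/N` form used in the example. It does not check value ranges such as minutes below 60.

```python
import re

# Assumption: each field is "*", a plain number, or an interval written as "*/N".
FIELD_PATTERN = re.compile(r"^(\*|\d+|\*/[1-9]\d*)$")


def is_supported_schedule(expression):
    """Return True if the expression has five fields made only of stars, numbers, or intervals."""
    fields = expression.split()
    return len(fields) == 5 and all(FIELD_PATTERN.match(field) for field in fields)


print(is_supported_schedule("30 1 * * *"))     # the default value
print(is_supported_schedule("30 */4 * * *"))   # the example above
print(is_supported_schedule("30 1-5 * * *"))   # ranges are not among the allowed forms
```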

You can exclude specific service instances from this backup cycle by changing the instance config with the a9s Service Dashboard API V1 (see Service-Dashboard).

Retention Policy

You can configure a custom retention policy to match your requirements by setting the following properties.

Regular Backup Deletion

Like backup creation, the Backup Manager also deletes backups in a regular cycle. To configure when deletion starts, set the delete_backup_repeat property. This only starts the deletion process; it takes some time until every backup that can be deleted has been deleted. The configuration works similarly to a cron job but with limited functionality: only stars, numbers, and intervals are allowed.

The default value is:

"30 1 * * *"

Description: '(Minute) (Hour) (Day of the month) (Month) (Day of the week)'

Example:

    properties:
      anynines-backup-manager:
        delete_backup_repeat: "30 */12 * * *"

Minimum Amount of Backups

The min_backups_per_instance property sets the minimum number of backups kept for any instance before a backup is deleted. If fewer backups exist for a service instance, no backups are removed. Even if there are more backups than the configured value, it is possible that no backup is removed because none has reached the min_backup_age value. Backups are only deleted if both values are reached.

Default: 5

Example:

    properties:
      anynines-backup-manager:
        min_backups_per_instance: 5

Minimum Age of Backups

The min_backup_age property sets the minimum age, in days, that a backup must reach before it may be deleted. Even if there are backups older than the configured value, it is possible that no backup is removed because the min_backups_per_instance value has not been reached. Backups are only deleted if both values are reached.

Default: 7

Example:

    properties:
      anynines-backup-manager:
        min_backup_age: 7
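
The interplay of min_backups_per_instance and min_backup_age can be illustrated with a short Python sketch. It shows one plausible reading of the rule that both values must be reached, not the Backup Manager's actual implementation: the function name select_deletable is hypothetical, and it assumes that the oldest backups are considered first and that a backup exactly min_backup_age days old counts as old enough.

```python
from datetime import datetime, timedelta


def select_deletable(backup_times, now, min_backups_per_instance=5, min_backup_age=7):
    """Return the creation times of backups that may be deleted, oldest first.

    Hypothetical helper: a backup qualifies only if removing it still leaves
    min_backups_per_instance backups AND it is at least min_backup_age days old.
    """
    cutoff = now - timedelta(days=min_backup_age)
    ordered = sorted(backup_times)
    # Number of backups beyond the minimum that must always be kept.
    surplus = max(len(ordered) - min_backups_per_instance, 0)
    # Of the surplus (oldest) backups, only those that are old enough qualify.
    return [created for created in ordered[:surplus] if created <= cutoff]


now = datetime(2024, 1, 31)
# Eight daily backups from 24 to 31 January: three are surplus, but only one is 7 days old.
recent = [datetime(2024, 1, day) for day in range(24, 32)]
print(select_deletable(recent, now))
```

In this example there are more backups than min_backups_per_instance, yet only one is returned, which matches the case described above where surplus backups are kept because they have not reached min_backup_age.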

Retention Policy for Deleted Service Instances

To ensure that backups are deleted after a service instance is deleted, set the retention_time_for_backups_of_a_deleted_instance property. It defines how many days to wait before all backups of the deleted service instance are deleted. Until that date is reached, the normal retention policy applies. If the property is set to 0, the backups of a deleted service instance are deleted with the next run of the "delete old backups" job.

Example:

    properties:
      anynines-backup-manager:
        retention_time_for_backups_of_a_deleted_instance: 30
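
As a rough illustration of the waiting period, the following Python sketch computes whether the backups of a deleted service instance would be due for removal. The function name and its parameters are hypothetical, and the actual removal still depends on the next run of the deletion job.

```python
from datetime import datetime, timedelta


def backups_due_for_purge(instance_deleted_at, now, retention_days):
    """Return True once retention_days have passed since the instance was deleted.

    Hypothetical helper: with retention_days set to 0 the backups are due
    immediately, i.e. with the next run of the deletion job.
    """
    if retention_days < 0:
        raise ValueError("retention_days must not be negative")
    return now >= instance_deleted_at + timedelta(days=retention_days)


deleted_at = datetime(2024, 1, 1)
print(backups_due_for_purge(deleted_at, datetime(2024, 1, 30), 30))  # still within the waiting period
print(backups_due_for_purge(deleted_at, datetime(2024, 1, 31), 30))  # waiting period is over
```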

Database Configuration

The database can be configured in the a9s Backup Manager by setting the anynines-backup-manager.database BOSH property.

Example:

    properties:
      anynines-backup-manager:
        database:
          encoding: utf8
          database_name: backupmanager
          user_name: backupmanager
          password: backupmanager-password
          host: backupmanager-host
          port: 5432
          pool: 10

The properties encoding, port, and pool are optional; their default values are the ones shown above.

Service Broker Configuration

The a9s Service Brokers can be configured in the a9s Backup Manager by setting the anynines-backup-manager.service_brokers BOSH property.

We have included Ops files in anynines-deployment to add the service-specific a9s Service Brokers to the configuration.

Furthermore, you can extend this configuration to limit the number of backups running in parallel, as seen in the example below. This limit applies per Data Service, ranks above the default global limit (shared_parallel_backup_tasks), and takes precedence over it.

This parameter is optional. Accepted values are integers greater than 0, or -1 to deactivate the limit, which is the default. For more information on this property, refer to a9s Backup Manager BOSH Properties.

For example it looks like this for the a9s PostgreSQL Service Broker:

    properties:
      anynines-backup-manager:
        service_brokers:
          postgresql:
            api_endpoint: 'http://postgresql-service-broker.service.dc1.((iaas.consul.domain)):3000'
            username: admin
            password: brokersecret
            parallel_backups_limit: 5
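
The precedence between the two limits can be sketched as a small Python function. This is an interpretation of the description above rather than the Backup Manager's code: the function name is hypothetical, and falling back to the global limit when the per-service limit is deactivated (-1) is an assumption.

```python
def effective_parallel_limit(shared_parallel_backup_tasks, parallel_backups_limit=-1):
    """Return the parallel backup limit that would apply to one Data Service.

    Hypothetical helper: a per-service limit greater than 0 takes precedence;
    -1 (the default) deactivates it, in which case the global limit is
    assumed to apply.
    """
    if parallel_backups_limit == -1:
        return shared_parallel_backup_tasks
    if isinstance(parallel_backups_limit, int) and parallel_backups_limit > 0:
        return parallel_backups_limit
    raise ValueError("parallel_backups_limit must be an integer > 0 or -1")


print(effective_parallel_limit(10, 5))   # per-service limit wins
print(effective_parallel_limit(10))      # per-service limit deactivated
```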

a9s PostgreSQL Specific

Lock Wait Timeout

Logical backups use the tool pg_dumpall to create backups. The --lock-wait-timeout command line argument for pg_dumpall is set to 1 hour by default.

A failed backup attempt is repeated a few times. A backup that fails because of locking issues can therefore take a multiple of 1 hour.

The default value (in milliseconds) can be changed via the BOSH property backup-agent.plugins.input.postgresql.local.pg_dumpall_lock_wait_timeout.

To change the value for example to 20 seconds, you could apply the following change to the a9s PostgreSQL service manifest:

    ...
    properties:
      template-uploader:
        template-custom-ops: |
          - type: replace
            path: /instance_groups/name=pg/jobs/name=backup-agent/properties/backup-agent/plugins/input/postgresql?/local?/pg_dumpall_lock_wait_timeout?
            value: 20000
    ...
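
To estimate how long a backup might be blocked by locks in the worst case, the timeout can be multiplied by the number of attempts. The Python sketch below does only that arithmetic. The number of attempts is not specified in this document, so the attempts parameter is a placeholder you need to supply, and the function name is hypothetical.

```python
from datetime import timedelta

# Default of 1 hour, expressed in milliseconds as the BOSH property expects.
DEFAULT_LOCK_WAIT_TIMEOUT_MS = 60 * 60 * 1000


def worst_case_lock_wait(attempts, lock_wait_timeout_ms=DEFAULT_LOCK_WAIT_TIMEOUT_MS):
    """Return the total time spent waiting if every attempt runs into the lock timeout.

    Hypothetical helper: attempts is an assumed retry count, not a documented value.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    return timedelta(milliseconds=attempts * lock_wait_timeout_ms)


print(worst_case_lock_wait(3))          # with the default timeout
print(worst_case_lock_wait(3, 20000))   # with the 20-second value from the example above
```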

Concerns

Memory Usage

The a9s Backup Agent has a monit alert limit for the memory usage:

if totalmem > <backup-agent.thresholds.alert_if_above_mb> Mb then alert

To see whether the backup process used too much memory, examine the monit log, located at /var/vcap/monit/monit.log. If the process exceeds the memory alert limit, monit adds a new line to this file.

If that happens regularly, consider updating the service instance to a plan with more memory available.

The current memory usage is available in the metrics.