a9s MariaDB Cluster Setup Overview

This document provides an overview of the topology and general configuration of an a9s MariaDB cluster instance.

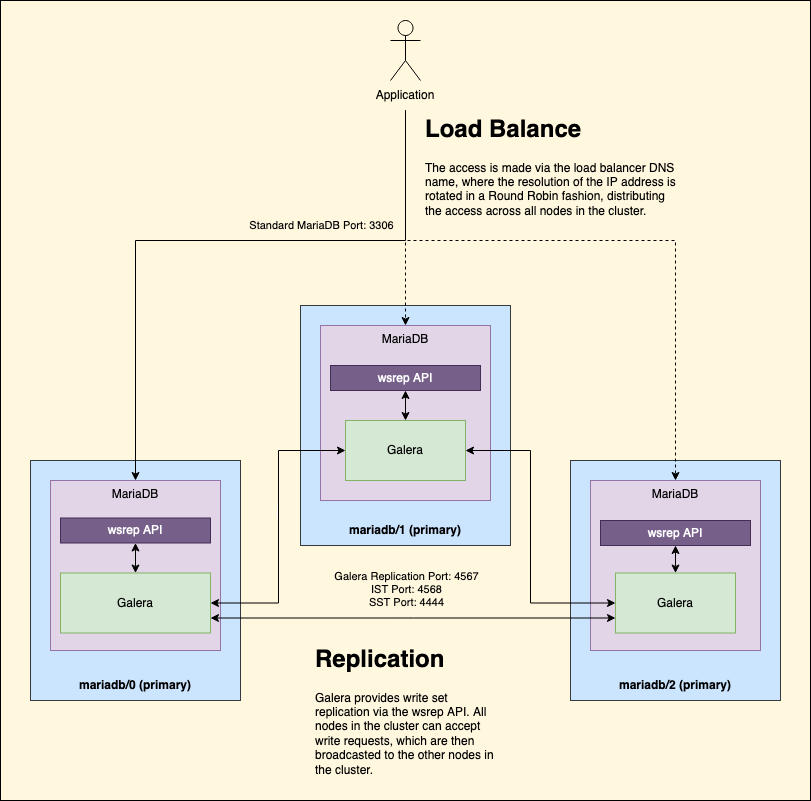

All a9s MariaDB cluster instances run in a synchronous multi-master (also known as multi-primary) replication setup. This is made possible through the use of MariaDB Galera Cluster.

These cluster instances operate on a quorum basis: the cluster stays up and running as long as a majority of its nodes is healthy. For the default 3-node cluster, this means that one node can fail without taking the cluster down.
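The majority rule can be illustrated with a minimal sketch. It assumes every node counts equally and ignores any node weighting that Galera may apply; the function name `has_quorum` is hypothetical and not part of a9s or MariaDB.

```python
def has_quorum(healthy_nodes: int, total_nodes: int) -> bool:
    """Return True when strictly more than half of the nodes are healthy.

    Simplified illustration: all nodes are assumed to carry equal weight.
    """
    if total_nodes <= 0 or not 0 <= healthy_nodes <= total_nodes:
        raise ValueError("node counts out of range")
    # A strict majority is required; exactly half is not enough.
    return 2 * healthy_nodes > total_nodes


if __name__ == "__main__":
    for healthy in range(4):
        print(f"{healthy}/3 healthy -> quorum: {has_quorum(healthy, 3)}")
```

Under this rule, a cluster with an even number of nodes that is split exactly in half has no majority on either side, which is one reason odd cluster sizes are commonly used.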

MariaDB Galera Cluster

MariaDB Galera Cluster is a multi-primary cluster for MariaDB. Replication happens at a transaction's commit time: the write set associated with the transaction is broadcast to every node in the cluster.

Furthermore, MariaDB Galera Cluster enables a9s MariaDB clusters to use bi-directional replication, meaning that all nodes in the a9s MariaDB cluster are primaries. Thus, by default, you are provided with a 3-node cluster with 3 primaries.

MariaDB Galera Cluster also comes with the following features:

- Synchronous replication

- Active-active multi-primary topology

- Read and write to any cluster node

- Automatic membership control, failed nodes drop from the cluster

- Automatic node joining

- True parallel replication, on row level

Load Balancing

The DNS server responds to requests for the MariaDB cluster address with an array of IPs for all the nodes in the cluster. To achieve load balancing, the DNS server rotates the IPs in the array using a round-robin algorithm. This means that the first IP in the array is the most recent one to be used. So the array always contains all the IPs, but their order changes with each request.

This rotation mechanism may cause issues if the DNS response is cached or processed incorrectly. Make sure to use the first IP in the array and be aware of the caching behavior.
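As a rough sketch of the behavior described above, the following simulates the rotation and shows a client that resolves the address on every connection attempt and takes the first entry. The rotation direction, the function names, and any hostname passed to `resolve_first_ip` are assumptions for illustration only; 3306 is the default MariaDB port.

```python
import socket
from collections import deque


def rotate_round_robin(ips, requests):
    """Simulate the IP order returned after `requests` DNS queries.

    Assumption: the server shifts the list by one position per query.
    """
    ring = deque(ips)
    ring.rotate(-requests)
    return list(ring)


def resolve_first_ip(hostname, port=3306):
    """Resolve the hostname afresh and return the first address in the answer.

    Note: the operating system resolver may cache or reorder the answer,
    so the first entry seen here is not guaranteed to match the DNS order.
    """
    infos = socket.getaddrinfo(hostname, port, type=socket.SOCK_STREAM)
    return infos[0][4][0]


if __name__ == "__main__":
    nodes = ["10.0.0.1", "10.0.0.2", "10.0.0.3"]  # hypothetical node IPs
    for n in range(4):
        print(n, rotate_round_robin(nodes, n))
```

Resolving on each new connection, rather than storing the address once at startup, keeps the client from pinning all traffic to a single node.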

Replication

As mentioned above, replication on an a9s MariaDB cluster instance is handled by MariaDB Galera Cluster. A node is brought up to date using one of two methods: State Snapshot Transfer (SST) or Incremental State Transfer (IST).

MariaDB Galera Cluster uses the SST method when a new node is being integrated into the cluster, or when a node has fallen behind and IST cannot recover the missing transactions. In a nutshell, a node serving as the donor sends a full copy of its data to the new or joining node.

Conversely, IST is preferred when the joining node already belonged to the cluster. This method works by identifying the missing transactions and sending only those transactions instead of the full state. The only caveat, aside from previous membership, is that the missing write sets must still be available in the donor's write-set cache (gcache).
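The choice between the two methods can be sketched as a small decision function. This is a simplified model, not Galera's actual implementation: the `JoinerState` type, its field names, and the idea of comparing plain sequence numbers are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class JoinerState:
    previously_member: bool  # the node already belonged to this cluster
    last_applied_seqno: int  # last transaction the joiner has applied


def choose_state_transfer(joiner: JoinerState, donor_oldest_retained_seqno: int) -> str:
    """Return "IST" when only the missing transactions can be sent, else "SST"."""
    if not joiner.previously_member:
        # A brand-new node has no usable state, so it needs a full copy.
        return "SST"
    # IST requires that every transaction after the joiner's position
    # is still retained by the donor.
    if joiner.last_applied_seqno + 1 >= donor_oldest_retained_seqno:
        return "IST"
    # The joiner has fallen too far behind; fall back to a full copy.
    return "SST"


if __name__ == "__main__":
    print(choose_state_transfer(JoinerState(False, 0), 1))
    print(choose_state_transfer(JoinerState(True, 100), 50))
    print(choose_state_transfer(JoinerState(True, 10), 50))
```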

Cluster Health Logger

The cluster health logger periodically logs the wsrep and binary log status to /var/vcap/sys/log/cluster-health-logger/cluster_health.log.

At the time of writing, the logged information summarizes the output of the following queries:

SHOW STATUS WHERE Variable_name like 'wsrep%';

SHOW VARIABLES WHERE Variable_name = 'sql_log_bin';

The connection is made via the local socket.
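To give an idea of how the wsrep status could be interpreted, here is a minimal sketch that condenses the rows returned by the first query into a few health indicators. The function name and the sample rows are hypothetical, and the actual format of cluster_health.log is not shown here; the variable names used are standard Galera status variables.

```python
def summarize_wsrep_status(rows):
    """Condense (Variable_name, Value) rows into a small health summary."""
    status = dict(rows)
    return {
        "cluster_size": int(status.get("wsrep_cluster_size", 0)),
        # "Primary" means the node is part of the component that holds quorum.
        "in_primary_component": status.get("wsrep_cluster_status") == "Primary",
        # "Synced" means the node has caught up with the cluster.
        "synced": status.get("wsrep_local_state_comment") == "Synced",
        # "ON" means the node accepts queries.
        "ready": status.get("wsrep_ready") == "ON",
    }


if __name__ == "__main__":
    sample_rows = [  # hypothetical query output
        ("wsrep_cluster_size", "3"),
        ("wsrep_cluster_status", "Primary"),
        ("wsrep_local_state_comment", "Synced"),
        ("wsrep_ready", "ON"),
    ]
    print(summarize_wsrep_status(sample_rows))
```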

GRA Log Purger

MariaDB creates a file with debug information for each replication error. These files are never deleted automatically, so they can eventually fill the whole disk. The gra-log-purger periodically checks for GRA log files older than 30 days and deletes them.
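The purging logic can be approximated with the sketch below. It is not the actual gra-log-purger: the function name, the `GRA_*.log` file name pattern, and the use of the file modification time to determine age are assumptions made for illustration.

```python
import time
from pathlib import Path


def purge_old_gra_logs(data_dir, max_age_days=30, now=None):
    """Delete GRA_*.log files older than max_age_days and return their names."""
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 86400  # age limit in seconds
    removed = []
    for path in Path(data_dir).glob("GRA_*.log"):
        # Only regular files whose last modification is before the cutoff.
        if path.is_file() and path.stat().st_mtime < cutoff:
            path.unlink()
            removed.append(path.name)
    return sorted(removed)
```

A periodic job would call this function with the MariaDB data directory; files that do not match the pattern are left untouched.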

Galera Healthcheck

The Galera healthcheck provides an HTTP API to retrieve the status of the Galera cluster. This API is only used by the internal components of the cluster.

Time Zone Support

Several tables in the mysql system schema exist to store time zone information. By default, the MySQL installation procedure creates the time zone tables but does not load them. So that the Application Developer can set and configure time zones, the time zone tables are populated during the pre-start process of each node of the cluster.