Disaster Recovery

Currently, a9s KeyValue, a9s Messaging, a9s MongoDB, and a9s PostgreSQL are the only Data Services that support disaster recovery into a newly created Service Instance. All other a9s Data Services require manual steps, which are not specified here and vary from one Data Service to another.

Applying the information in this document to any a9s Data Service other than the ones mentioned above can leave your Service Instances in an unrecoverable state.

Introduction

a9s Data Services support disaster recovery via the a9s Backup Manager, which restores an existing backup into a fresh Service Instance. This can be used to fork an already running instance, or to recover the last saved state of an unavailable Service Instance, even after the failure of a whole site or IaaS region.

The requirements for this operation are:

- The Service Instance GUID (e.g. cf service <service-name> --guid).

- The encryption password provided by the a9s Backup Manager. The customer must configure and store the encryption password, but the Platform Operator can also extract it from the a9s Backup Manager database if the password is unknown.

- Access to the backup store where the backups are saved: the secondary store.
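Before starting a recovery, it can help to sanity-check the Service Instance GUID. The following Ruby snippet is a minimal sketch. The valid_guid? helper is hypothetical and not part of the a9s tooling. It assumes the GUID printed by cf service <service-name> --guid uses the usual 8-4-4-4-12 hexadecimal layout.

```ruby
# Hypothetical helper, not part of the a9s Backup Manager: checks that a
# value has the 8-4-4-4-12 hexadecimal layout of a GUID such as the one
# printed by `cf service <service-name> --guid`.
GUID_PATTERN = /\A\h{8}-\h{4}-\h{4}-\h{4}-\h{12}\z/

def valid_guid?(value)
  # Surrounding whitespace (e.g. a trailing newline from copy/paste) is ignored.
  value.is_a?(String) && GUID_PATTERN.match?(value.strip)
end

valid_guid?("de2fe9e4-6535-423d-8709-dca87df7985c") # => true
valid_guid?("my-service")                           # => false
```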

While the a9s Backup Framework supports AWS S3 as a backup store, this does not extend to every AWS S3 storage class. Currently, S3 Standard is the only supported storage class.
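An operator may want to check the storage class of objects in an existing bucket before using it as a backup store. The following Ruby helper is a hypothetical sketch; supported_storage_class? is not an a9s API. It accepts only STANDARD, which is the S3 API identifier for S3 Standard. How the storage class is obtained (for example, with the aws-sdk-s3 gem) is left out.

```ruby
# Hypothetical pre-flight check, not part of the a9s Backup Framework.
# "STANDARD" is the S3 API identifier of the S3 Standard storage class.
SUPPORTED_STORAGE_CLASSES = ["STANDARD"].freeze

def supported_storage_class?(storage_class)
  # S3 HeadObject/GetObject responses omit the storage class for
  # S3 Standard objects, so a missing value is treated as STANDARD.
  normalized = storage_class.nil? ? "STANDARD" : storage_class.to_s.upcase
  SUPPORTED_STORAGE_CLASSES.include?(normalized)
end

supported_storage_class?("STANDARD") # => true
supported_storage_class?(nil)        # => true
supported_storage_class?("GLACIER")  # => false
```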

Terminology

| Term | Description |
|---|---|
| backup store | The cloud blob storage in which the backups are stored (e.g. Azure or an AWS S3-compliant store). |
| site | A full a9s Data Service deployment plus a chosen blob store. Optionally, a PaaS (e.g. Cloud Foundry). |
| site catastrophe | The site is unavailable. |
| primary backup store | The main site's blob storage. |
| secondary backup store | The disaster recovery blob storage. |

Overview

When a recovery is executed, the a9s Backup Manager reads the data stored in the secondary backup store. The backup's metadata file contains the information needed to recreate the Service Instance with the most recent data available.

The a9s framework supports several setups that reduce downtime for different kinds of failures. These range from forking a single instance to recovering from a disaster that affects a complete site, including its backup store.

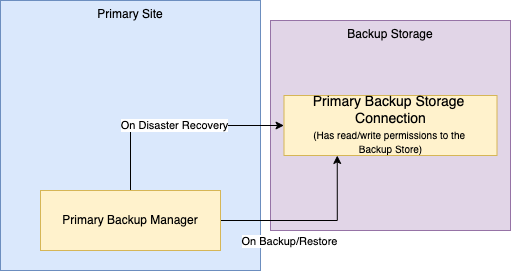

Single Site Single Backup Store: a single a9s Data Services deployment is running and only one backup store is configured, which is also considered the secondary store. It is possible to fork an existing Service Instance or a Service Instance that has been deleted. This is the default.

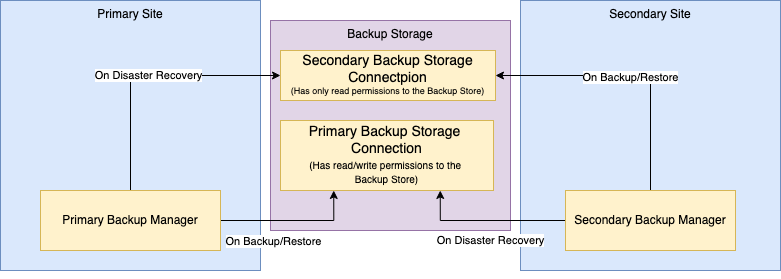

Two Sites Single Backup Store: two a9s Data Services deployments are running, and clients have access to both of them, or to the secondary one in case of a catastrophe. It is possible to fork a Service Instance on the secondary site even when the primary site is unavailable. This setup does not tolerate a failure of the backup store.

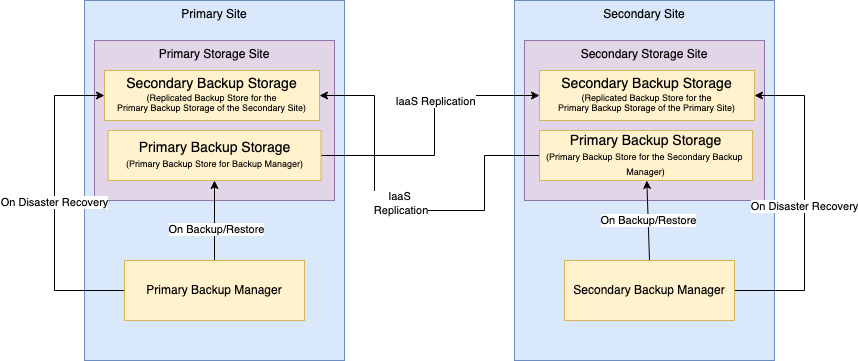

Two Sites Two Backup Stores: two a9s Data Services deployments are running with two different backup stores, and the primary backup store replicates its changes to the secondary one. This setup supports restoring the last saved state of a Service Instance even if the whole site or the backup store is unavailable. It offers the highest level of resilience against catastrophe.

The sites are defined as primary and secondary sites, with the secondary site ready to take over whenever the primary site suffers a disaster.
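The differences between the three setups can be summarized as data. The following Ruby sketch is purely illustrative. TOPOLOGIES and topologies_tolerating are hypothetical names, not a9s configuration keys. The table only encodes the statements above about surviving a site failure and a backup store failure.

```ruby
# Illustrative summary of the three setups above; TOPOLOGIES and
# topologies_tolerating are hypothetical names, not a9s configuration.
TOPOLOGIES = {
  single_site_single_store: { site_failure: false, store_failure: false },
  two_sites_single_store:   { site_failure: true,  store_failure: false },
  two_sites_two_stores:     { site_failure: true,  store_failure: true }
}.freeze

# Returns the setups that tolerate every requested failure kind.
def topologies_tolerating(*failures)
  TOPOLOGIES.select { |_name, tolerates| failures.all? { |f| tolerates.fetch(f) } }.keys
end

topologies_tolerating(:site_failure)
# => [:two_sites_single_store, :two_sites_two_stores]
topologies_tolerating(:site_failure, :store_failure)
# => [:two_sites_two_stores]
```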

An instance's data can only be recovered if its latest backup is still available, so the retention time for backups must be observed. You can find out more about this in the Retention Policy for Deleted Service Instances documentation.

Single Site Single Backup Store

In this approach, a single site stores the backups in a single backup store. It does not support recovery from a site catastrophe, but it can restore the last saved state of an instance, even an unavailable one, into a fresh Service Instance.

The only configuration required is the primary backup store. Example:

```yaml
properties:
  storage:
    type: s3
    uri: '?bucket=((/backup_bucket))&region=((/backup_region))'
    authentication:
      access_key_id: ((/backup_access_key))
      secret_access_key: ((/backup_secret_access_key))
```
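The uri value is a query string that carries the bucket and the region. The following Ruby snippet shows how such a string decodes once the ((...)) variables have been interpolated. It is an illustration only and is not how the a9s Backup Manager parses it internally. The bucket and region values are made up.

```ruby
require "uri"

# Illustrative only: decodes a storage uri such as
# '?bucket=my-backups&region=eu-central-1' into a Hash.
# The bucket and region values are made up.
def parse_storage_uri(uri)
  URI.decode_www_form(uri.delete_prefix("?")).to_h
end

settings = parse_storage_uri("?bucket=my-backups&region=eu-central-1")
puts settings["bucket"] # prints my-backups
puts settings["region"] # prints eu-central-1
```

The two parameters must be separated by a literal &. If the separator is lost when copying the configuration, the bucket and region will not be read as separate values.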

In case a9s Backup Agents with versions lower than v147.0.0 are still deployed, the following configuration is also needed.

```yaml
anynines-backup-manager:
  ...
  plugin_configuration:
    fog:
      name:
        backup: "fog"
        restore: "fog"
      provider: "AWS"
      aws_access_key_id: ((/backup_access_key))
      aws_secret_access_key: ((/backup_secret_access_key))
      container: ((/backup_bucket))
      region: ((/backup_region))
```

When using AWS IAM Instance Profiles, the aws_access_key_id and aws_secret_access_key parameters MUST be removed from the configuration.

For more information on how to enable this feature, please refer to Using AWS Instance Profiles.
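When the configuration is generated by a script, the credential removal described above can be done programmatically. The following Ruby helper is a minimal sketch. without_static_credentials is a hypothetical name, and it works on a plain Hash that mirrors the fog plugin configuration. It only removes the two keys named above.

```ruby
# Hypothetical helper, not part of the a9s tooling: returns a copy of a fog
# plugin configuration without the static AWS credentials, as required when
# AWS IAM Instance Profiles are used. The input Hash is not modified.
CREDENTIAL_KEYS = %w[aws_access_key_id aws_secret_access_key].freeze

def without_static_credentials(fog_config)
  fog_config.reject { |key, _value| CREDENTIAL_KEYS.include?(key.to_s) }
end

fog = {
  "provider" => "AWS",
  "aws_access_key_id" => "example-key-id",
  "aws_secret_access_key" => "example-secret",
  "container" => "my-backups",
  "region" => "eu-central-1"
}
without_static_credentials(fog).keys
# => ["provider", "container", "region"]
```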

Two Sites Single Backup Store

In this approach, both sites are configured to use the same backup store. It tolerates the failure of a full site, except for the backup store, by recovering the last saved state of a Service Instance on the secondary site.

The primary site configures the primary store. Example:

```yaml
properties:
  storage:
    type: s3
    uri: '?bucket=((/backup_bucket))&region=((/backup_region))'
    authentication:
      access_key_id: ((/backup_access_key))
      secret_access_key: ((/backup_secret_access_key))
```

In case a9s Backup Agents with versions lower than v147.0.0 are still deployed, the following configuration is also needed.

```yaml
anynines-backup-manager:
  ...
  plugin_configuration:
    fog:
      name:
        backup: "fog"
        restore: "fog"
      provider: "AWS"
      aws_access_key_id: ((/backup_access_key))
      aws_secret_access_key: ((/backup_secret_access_key))
      container: ((/backup_bucket))
      region: ((/backup_region))
```

The secondary site configures the secondary store with the same cloud properties as the primary site's store, so that both sites point to the same backup store. Example:

```yaml
properties:
  disaster_recovery_storage:
    type: s3
    uri: '?bucket=((/backup_bucket_secondary))&region=((/backup_region_secondary))'
    authentication:
      access_key_id: ((/backup_access_key_secondary))
      secret_access_key: ((/backup_secret_access_key_secondary))
```
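In this setup both sites share one backup store. The secondary site's disaster_recovery_storage must therefore resolve to the same store type, bucket and region as the primary site's storage. The following Ruby snippet is a hypothetical pre-deployment check and is not part of the a9s tooling. It assumes both properties have been loaded into Hashes with the ((...)) variables already interpolated.

```ruby
require "uri"

# Hypothetical pre-deployment check, not part of the a9s tooling. Both
# arguments are Hashes mirroring the YAML above, with the ((...)) variables
# already interpolated.
def same_backup_store?(primary_storage, secondary_dr_storage)
  target = lambda do |storage|
    params = URI.decode_www_form(storage.fetch("uri").delete_prefix("?")).to_h
    [storage.fetch("type"), params["bucket"], params["region"]]
  end
  target.call(primary_storage) == target.call(secondary_dr_storage)
end

primary = { "type" => "s3", "uri" => "?bucket=my-backups&region=eu-central-1" }
secondary = { "type" => "s3", "uri" => "?region=eu-central-1&bucket=my-backups" }
same_backup_store?(primary, secondary) # => true
```

Comparing decoded parameters rather than raw strings makes the check independent of parameter order.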

In case a9s Backup Agents with versions lower than v147.0.0 are still deployed, the following configuration is also needed.

```yaml
anynines-backup-manager:
  ...
  plugin_configuration:
    disaster_recovery_backup_store:
      name:
        backup: "fog"
        restore: "fog"
      provider: "AWS"
      aws_access_key_id: ((/backup_access_key_secondary))
      aws_secret_access_key: ((/backup_secret_access_key_secondary))
      container: ((/backup_bucket_secondary))
      region: ((/backup_region_secondary))
```

When using AWS IAM Instance Profiles, the aws_access_key_id and aws_secret_access_key parameters MUST be removed from the configuration.

For more information on how to enable this feature, please refer to Using AWS Instance Profiles.

Recovering an instance on the secondary site uses the disaster_recovery_backup_store. However, any new backup or restore on this site is stored in the primary store configured on the secondary site.

We recommend configuring the secondary backup store with read-only access.
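The routing described above can be modeled as a lookup from operation to store. The following Ruby snippet is a hypothetical illustration; STORE_FOR_OPERATION and store_for are not part of the a9s Backup Manager. It also shows that, on the secondary site, no operation writes to the disaster recovery store, which is consistent with the read-only recommendation above.

```ruby
# Hypothetical model of the secondary site in the Two Sites Single Backup
# Store setup; these names are illustrative, not a9s code.
STORE_FOR_OPERATION = {
  disaster_recovery: :disaster_recovery_backup_store, # reads the primary site's backups
  backup: :primary_store,                             # new backups of this site
  restore: :primary_store                             # regular restores on this site
}.freeze

def store_for(operation)
  STORE_FOR_OPERATION.fetch(operation) do
    raise ArgumentError, "unknown operation: #{operation}"
  end
end

store_for(:disaster_recovery) # => :disaster_recovery_backup_store
store_for(:backup)            # => :primary_store
```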

Two Sites Two Backup Stores

In this approach, each site stores its backups in its own backup store. The store referenced in disaster_recovery_backup_store is configured at the IaaS level to receive the replicated changes from the other site's primary store. This way, one site can always recover from the other.

In this case, each site has a completely independent setup and is not aware of the other in any way. Therefore, to make disaster recovery possible, the primary backup store of each site replicates itself into an independent backup store on the other site.

The configuration for the primary store looks like this:

```yaml
properties:
  storage:
    type: s3
    uri: '?bucket=((/backup_bucket))&region=((/backup_region))'
    authentication:
      access_key_id: ((/backup_access_key))
      secret_access_key: ((/backup_secret_access_key))
```

In case a9s Backup Agents with versions lower than v147.0.0 are still deployed, the following configuration is also needed.

```yaml
anynines-backup-manager:
  ...
  plugin_configuration:
    fog:
      name:
        backup: "fog"
        restore: "fog"
      provider: "AWS"
      aws_access_key_id: ((/backup_access_key))
      aws_secret_access_key: ((/backup_secret_access_key))
      container: ((/backup_bucket))
      region: ((/backup_region))
```

The configuration for the secondary store looks like this:

```yaml
properties:
  disaster_recovery_storage:
    type: s3
    uri: '?bucket=((/backup_bucket_secondary))&region=((/backup_region_secondary))'
    authentication:
      access_key_id: ((/backup_access_key_secondary))
      secret_access_key: ((/backup_secret_access_key_secondary))
```

In case a9s Backup Agents with versions lower than v147.0.0 are still deployed, the following configuration is also needed.

```yaml
anynines-backup-manager:
  ...
  plugin_configuration:
    disaster_recovery_backup_store:
      name:
        backup: "fog"
        restore: "fog"
      provider: "AWS"
      aws_access_key_id: ((/backup_access_key_secondary))
      aws_secret_access_key: ((/backup_secret_access_key_secondary))
      container: ((/backup_bucket_secondary))
      region: ((/backup_region_secondary))
```

When using AWS IAM Instance Profiles, the aws_access_key_id and aws_secret_access_key parameters MUST be removed from the configuration.

For more information on how to enable this feature, please refer to Using AWS Instance Profiles.

The underlying infrastructure must handle the replication between the primary and secondary backup stores. The Platform Operator must set this up in the chosen backup store cloud.

For example, with the configuration above, the primary site's backup store must be configured at the IaaS level to replicate data from backup_bucket to backup_bucket_secondary.
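On AWS S3, one way to achieve this is S3 Replication configured on backup_bucket. The following Ruby sketch only builds the request parameters in the shape accepted by Aws::S3::Client#put_bucket_replication from the aws-sdk-s3 gem; it does not call AWS. The rule ID, IAM role ARN and bucket names are hypothetical placeholders. S3 Replication requires versioning on both buckets and only applies to objects written after the rule exists.

```ruby
# Builds (but does not send) the parameters for an S3 replication rule that
# copies every new object from the primary backup bucket to the secondary one.
# Bucket names, role ARN and rule ID are hypothetical placeholders.
def replication_params(source_bucket:, destination_bucket:, role_arn:)
  {
    bucket: source_bucket,
    replication_configuration: {
      role: role_arn,
      rules: [
        {
          id: "a9s-backup-dr",                   # hypothetical rule name
          priority: 1,
          filter: { prefix: "" },                # replicate all objects
          status: "Enabled",
          delete_marker_replication: { status: "Disabled" },
          destination: {
            bucket: "arn:aws:s3:::#{destination_bucket}",
            storage_class: "STANDARD"            # S3 Standard, the supported class
          }
        }
      ]
    }
  }
end

params = replication_params(
  source_bucket: "my-backups",
  destination_bucket: "my-backups-secondary",
  role_arn: "arn:aws:iam::123456789012:role/hypothetical-replication-role"
)
# With the aws-sdk-s3 gem installed and credentials configured:
#   Aws::S3::Client.new(region: "eu-central-1").put_bucket_replication(params)
```

Delete-marker replication is left disabled in this sketch. Whether deletions should propagate to the secondary store is a design decision to review against your backup retention policy.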

We recommend configuring the secondary backup store with read-only access.

Perform Disaster Recovery

To perform a recovery, the following information is required:

- The Service Instance's Backup GUID

- The encryption password provided by the Backup Manager

With this information, the Application Developer can perform the disaster recovery as described in the Disaster Recovery documentation.

However, the owner of the instance may not have this information. In this case, the Platform Operator can retrieve it from the a9s Backup Manager database as shown below.

Backup Manager Database

If the Service Instance no longer exists or the encryption key is unknown, the required information can be found in the Backup Manager database.

To find it, either the Service Instance GUID (e.g. cf service <service-name> --guid) or an old backup file name must be known.

Keep in mind that this only works if the Backup Manager is still available. In the case of a full disaster, this can't be done and the a9s Backup Manager must be restored first.

To get started, connect to the Backup Manager VM and start the Rails console as the root user:

```shell
backup_manager_vm="backup-service" # If using PCF, this is called ancillary-services
bosh -d <deployment-name> ssh ${backup_manager_vm}/0

# Inside the SSH session, become root and start the Rails console:
sudo su -
/var/vcap/jobs/anynines-backup-manager/bin/rails_c
```

The next steps depend on whether you know the Service Instance GUID or the backup file name.

Service Instance GUID is Known

The following commands need to be performed inside the Rails console:

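```ruby
cf_instance_guid = 'de2fe9e4-6535-423d-8709-dca87df7985c' # cf service <service-name> --guid
instance = Instance.find_by(instance_id: cf_instance_guid)
# find_by returns nil when no record matches; fail with a clear message.
raise "No Backup Manager instance found for #{cf_instance_guid}" if instance.nil?

backup_guid = instance.guid
encryption_key = instance.encryption_key
```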

The backup_guid and encryption_key are the values you need to perform the disaster recovery.

Backup File Name is Known

The following commands need to be performed inside the Rails console:

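```ruby
# Change the file name to your backup file name.
backup_file_name = '5c74ccd8-522e-4221-a303-ca561c7a2e75-1617818093610'
backup = Backup.find_by(backup_id: backup_file_name)
# find_by returns nil when no record matches; fail with a clear message.
raise "No backup found with ID #{backup_file_name}" if backup.nil?

instance = backup.instance
backup_guid = instance.guid
encryption_key = instance.encryption_key
```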

The backup_guid and encryption_key are the values you need to perform the disaster recovery.
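The example backup file name appears to consist of a GUID followed by a 13-digit number. The following hypothetical Ruby helper assumes, without confirmation from this documentation, that the number is a Unix timestamp in milliseconds. It splits a file name and converts the suffix to a UTC time, which can help when choosing between several old backups. The relation of the GUID prefix to backup_guid is not established here.

```ruby
require "time"

# Hypothetical helper. The "<GUID>-<13-digit number>" layout and the reading
# of the number as Unix milliseconds are assumptions based on the example
# file name, not a documented a9s format.
BACKUP_FILE_PATTERN = /\A(\h{8}-\h{4}-\h{4}-\h{4}-\h{12})-(\d{13})\z/

def split_backup_file_name(name)
  match = BACKUP_FILE_PATTERN.match(name)
  raise ArgumentError, "unexpected backup file name: #{name}" unless match

  millis = Integer(match[2], 10)
  created_at = Time.at(millis / 1000, millis % 1000, :millisecond).utc
  { guid: match[1], created_at: created_at }
end

info = split_backup_file_name("5c74ccd8-522e-4221-a303-ca561c7a2e75-1617818093610")
puts info[:guid]                  # prints 5c74ccd8-522e-4221-a303-ca561c7a2e75
puts info[:created_at].iso8601(3) # prints 2021-04-07T17:54:53.610Z
```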